New Multimodal AI Architecture Dramatically Improves Reasoning, Memory, and Tool Use

A new multimodal AI architecture combines step-by-step reasoning, a persistent long-term memory, and sandboxed tool use in a single system.

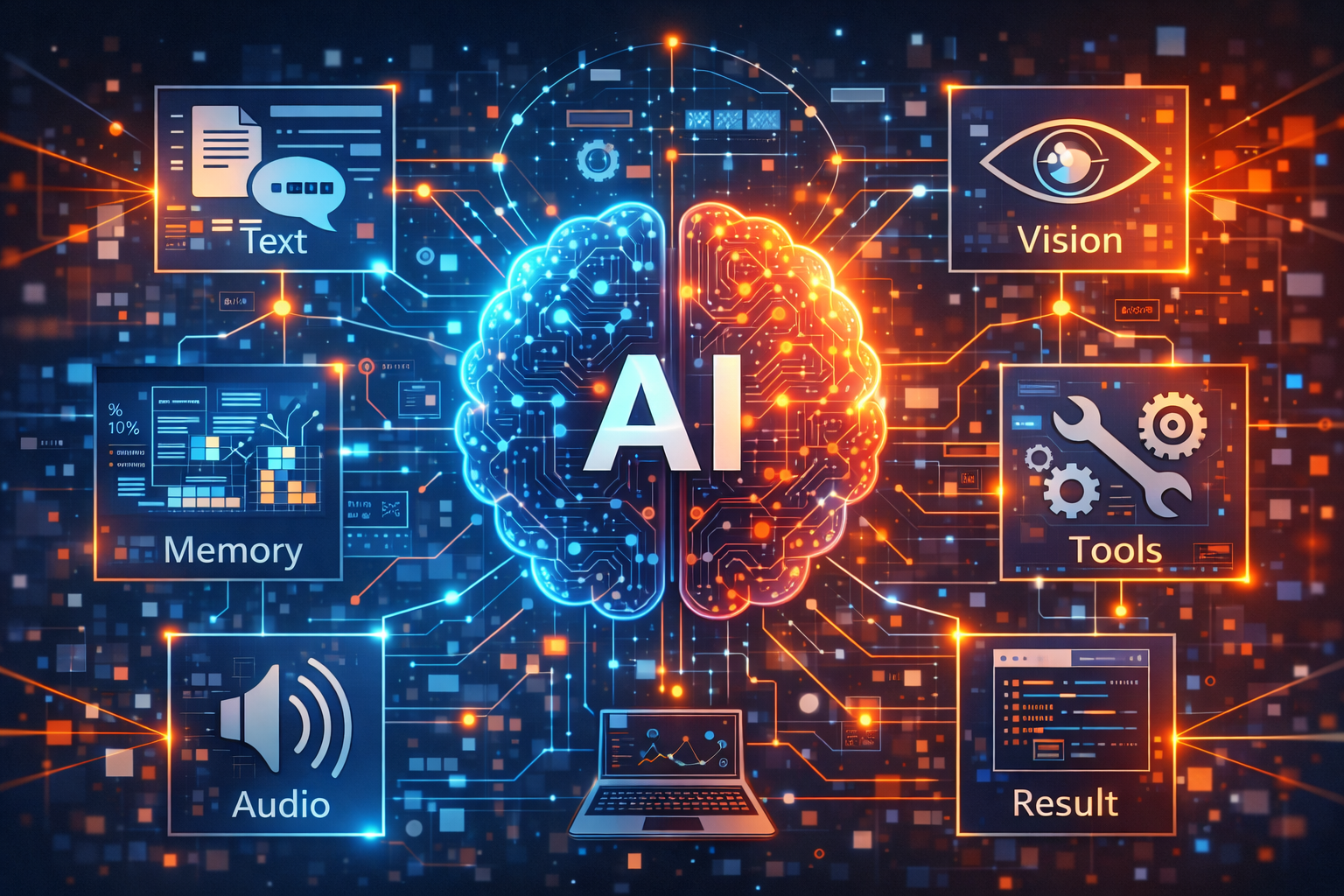

Researchers have introduced a next-generation multimodal AI architecture that significantly advances machine reasoning, long-term memory, and real-time tool use. Where many earlier models handled text, images, or audio in separate pipelines, this architecture natively fuses multiple data types into a single reasoning pipeline, so the system can draw on all of them at once when working through a complex task.

The model uses a layered cognitive stack that separates perception, reasoning, memory, and action. Visual and audio inputs are first encoded into shared semantic representations, which are then passed into a reasoning core capable of step-by-step logical planning. This core can dynamically decide when to retrieve stored memories, query external tools, or request additional context before producing an output.
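The following Python sketch illustrates the control flow described above. It is not the researchers' implementation: the names `encode`, `SharedRepresentation`, `ReasoningState`, `decide`, `run`, and `Action` are hypothetical. Perception is reduced to concatenating per-modality feature vectors, and the learned reasoning core is replaced by a hand-written rule that picks one of four actions until it is ready to answer.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class Action(Enum):
    RETRIEVE_MEMORY = auto()
    CALL_TOOL = auto()
    REQUEST_CONTEXT = auto()
    ANSWER = auto()


@dataclass
class SharedRepresentation:
    """Hypothetical modality-agnostic output of the perception layer."""
    modalities: list[str]
    features: list[float]


def encode(inputs: dict[str, list[float]]) -> SharedRepresentation:
    """Perception stand-in: concatenate per-modality feature vectors.

    A real system would use learned encoders that project each modality
    into a common embedding space; concatenation is only a placeholder.
    """
    modalities = sorted(inputs)
    features = [x for m in modalities for x in inputs[m]]
    return SharedRepresentation(modalities, features)


@dataclass
class ReasoningState:
    rep: SharedRepresentation
    needs_fact: bool = False         # answer depends on something stored earlier
    needs_computation: bool = False  # answer depends on an external tool
    ambiguous: bool = False          # task is underspecified
    trace: list[Action] = field(default_factory=list)


def decide(state: ReasoningState) -> Action:
    """Hand-written policy standing in for the learned reasoning core."""
    if state.ambiguous:
        return Action.REQUEST_CONTEXT
    if state.needs_fact:
        return Action.RETRIEVE_MEMORY
    if state.needs_computation:
        return Action.CALL_TOOL
    return Action.ANSWER


def run(state: ReasoningState, max_steps: int = 5) -> list[Action]:
    """Pick an action, assume it resolves its gap, and stop on ANSWER."""
    for _ in range(max_steps):
        action = decide(state)
        state.trace.append(action)
        if action is Action.ANSWER:
            break
        # Simulate each action closing the gap that triggered it.
        if action is Action.REQUEST_CONTEXT:
            state.ambiguous = False
        elif action is Action.RETRIEVE_MEMORY:
            state.needs_fact = False
        elif action is Action.CALL_TOOL:
            state.needs_computation = False
    return state.trace
```

For example, `run(ReasoningState(encode({"image": [0.1, 0.2], "audio": [0.3]}), needs_fact=True, needs_computation=True))` returns `[Action.RETRIEVE_MEMORY, Action.CALL_TOOL, Action.ANSWER]`. The fixed priority order here is only for illustration; in the architecture described, the choice is made dynamically by the reasoning core itself.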

A key technical breakthrough lies in its long-term memory mechanism. Instead of relying solely on its context window, the system maintains a structured memory graph that stores facts, events, and prior decisions. This lets the AI recall information across extended interactions and carry knowledge forward beyond what a single context window can hold, a kind of persistent learning and continuity that has previously been difficult to achieve.
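The article does not specify how the memory graph is organized. As a minimal sketch, assuming a hypothetical schema of typed nodes (`fact`, `event`, `decision`) joined by labeled, directed edges, storage and recall could look like this, with recall implemented as a breadth-first walk outward from a starting node. `MemoryNode` and `MemoryGraph` are illustrative names, not part of the described system.

```python
from collections import defaultdict
from dataclasses import dataclass

ALLOWED_KINDS = {"fact", "event", "decision"}  # hypothetical schema


@dataclass(frozen=True)
class MemoryNode:
    node_id: str
    kind: str
    content: str


class MemoryGraph:
    """Toy memory graph: typed nodes plus labeled, directed edges."""

    def __init__(self) -> None:
        self.nodes: dict[str, MemoryNode] = {}
        # Adjacency list: source id -> [(relation, destination id), ...]
        self.edges: dict[str, list[tuple[str, str]]] = defaultdict(list)

    def add(self, node_id: str, kind: str, content: str) -> None:
        if kind not in ALLOWED_KINDS:
            raise ValueError(f"unknown memory kind: {kind}")
        self.nodes[node_id] = MemoryNode(node_id, kind, content)

    def link(self, src: str, relation: str, dst: str) -> None:
        if src not in self.nodes or dst not in self.nodes:
            raise KeyError("both endpoints must exist before linking")
        self.edges[src].append((relation, dst))

    def recall(self, start: str, depth: int = 1) -> list[MemoryNode]:
        """Breadth-first walk from `start`, returning nodes within `depth` hops."""
        seen = {start}
        frontier = [start]
        result = [self.nodes[start]]
        for _ in range(depth):
            next_frontier = []
            for node_id in frontier:
                for _relation, dst in self.edges[node_id]:
                    if dst not in seen:
                        seen.add(dst)
                        next_frontier.append(dst)
                        result.append(self.nodes[dst])
            frontier = next_frontier
        return result


# Usage: an event from an earlier session links to the fact it relied on
# and the decision it produced.
g = MemoryGraph()
g.add("user_pref", "fact", "User prefers metric units")
g.add("session_3", "event", "User asked for a trip cost estimate")
g.add("chose_km", "decision", "Reported distances in kilometres")
g.link("session_3", "informed_by", "user_pref")
g.link("session_3", "led_to", "chose_km")
```

Here `[n.node_id for n in g.recall("session_3")]` gives `["session_3", "user_pref", "chose_km"]`. A production system might persist the graph and combine traversal with similarity search over node embeddings; the in-memory dictionaries only illustrate the structure.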

The architecture also introduces a secure tool-execution layer. The AI can call APIs, run code, and query databases while remaining sandboxed within predefined safety constraints. Engineers report that grounding answers in actual tool outputs reduces hallucinations in data-driven tasks and improves accuracy in workflows such as data analysis, software debugging, and automated research.
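The tool-execution layer is described only at a high level. The sketch below shows one common way to enforce predefined constraints, under illustrative assumptions: an allowlist of registered tools, a per-call argument-size limit, an audit log, and a toy "run code" tool that evaluates arithmetic by walking Python's `ast` and rejecting any syntax it does not explicitly permit, instead of calling `eval`. `SandboxedToolLayer`, `PolicyViolation`, and `safe_arithmetic` are hypothetical names.

```python
import ast
import operator
from typing import Any, Callable

# Allowlisted operators for the toy arithmetic tool; ** is deliberately
# excluded so a single call cannot request an enormous computation.
_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}
_UNARY_OPS = {ast.USub: operator.neg, ast.UAdd: operator.pos}


def safe_arithmetic(expr: str) -> float:
    """Evaluate + - * / on numeric literals only; reject everything else."""

    def walk(node: ast.AST) -> float:
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if (isinstance(node, ast.Constant)
                and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            return _BIN_OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](walk(node.operand))
        raise PermissionError(f"disallowed syntax: {type(node).__name__}")

    return walk(ast.parse(expr, mode="eval"))


class PolicyViolation(Exception):
    """Raised when a tool call breaks the sandbox policy."""


class SandboxedToolLayer:
    """Runs only registered tools, after a size check, and logs every call."""

    def __init__(self, max_arg_chars: int = 200) -> None:
        self._tools: dict[str, Callable[[str], Any]] = {}
        self.max_arg_chars = max_arg_chars
        self.audit_log: list[tuple[str, str, str]] = []

    def register(self, name: str, fn: Callable[[str], Any]) -> None:
        self._tools[name] = fn

    def call(self, name: str, arg: str) -> Any:
        if name not in self._tools:
            self.audit_log.append((name, arg, "denied: not allowlisted"))
            raise PolicyViolation(f"tool {name!r} is not allowlisted")
        if len(arg) > self.max_arg_chars:
            self.audit_log.append((name, arg, "denied: argument too long"))
            raise PolicyViolation("argument exceeds size limit")
        try:
            result = self._tools[name](arg)
        except PermissionError as exc:
            self.audit_log.append((name, arg, f"denied: {exc}"))
            raise PolicyViolation(str(exc)) from exc
        self.audit_log.append((name, arg, "ok"))
        return result
```

With `layer = SandboxedToolLayer()` and `layer.register("calc", safe_arithmetic)`, the call `layer.call("calc", "2 * (3 + 4)")` returns `14`, while `layer.call("shell", "ls")` or an expression such as `__import__('os').getcwd()` raises `PolicyViolation` and is recorded as denied in `audit_log`. Real sandboxes also isolate processes, the filesystem, and the network; this sketch covers only the policy checks.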

Early benchmarks reportedly show substantial improvements in complex problem-solving, including mathematical reasoning, multi-step planning, and cross-domain inference. In internal tests, the system outperformed existing large language models on tasks that required combining visual data, numerical analysis, and logical reasoning.

Experts believe this architecture represents a foundational shift toward more general-purpose AI systems. By integrating perception, memory, reasoning, and action into a single framework, the model moves closer to autonomous systems capable of operating reliably in real-world, high-stakes environments.